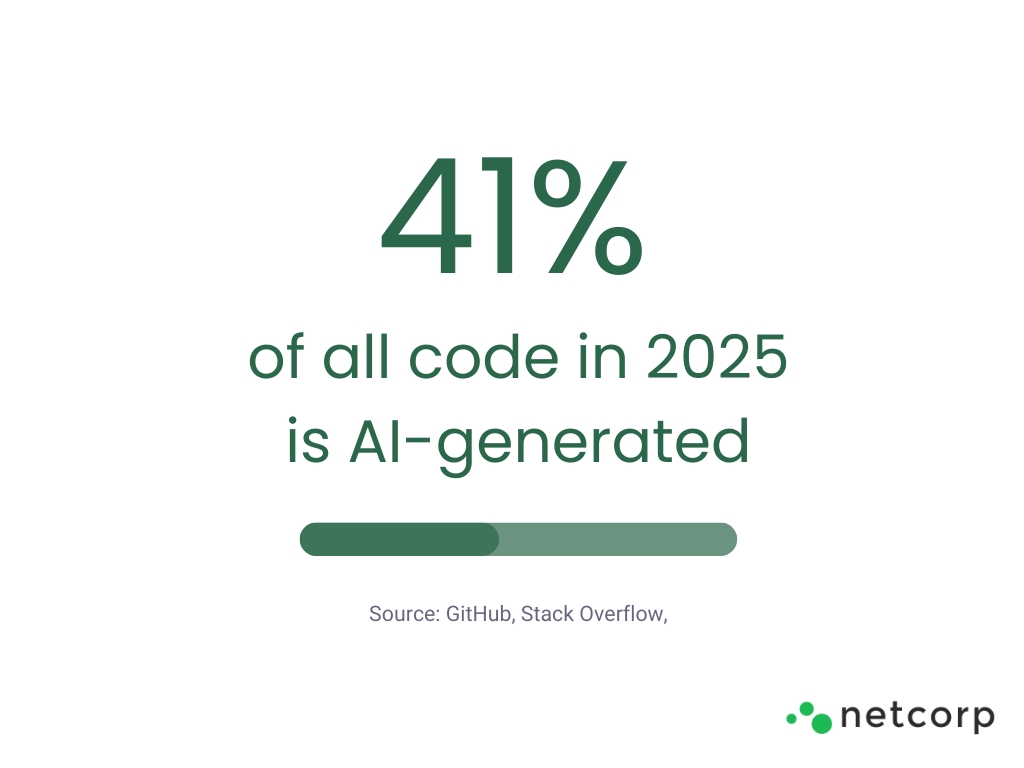

A seismic shift has unfolded fast, much of it in just the past six months: nearly half of all code written in 2025 is AI-generated, yet, paradoxically, demand for human developers remains stronger than ever.

We gathered all the current AI-generated code statistics, trends, and expert predictions to help you make future-proof decisions about engineering budgets and team structure. We’ll break down:

1. Where AI development tools outperform traditional workflows

2. Which platforms dominate the AI coding landscape

3. Where human developers are still critical, and where to combine AI and human talent through smart outsourcing

By the end, you’ll have a clear picture of where AI adds value, and where it’s not ready to take over (yet).

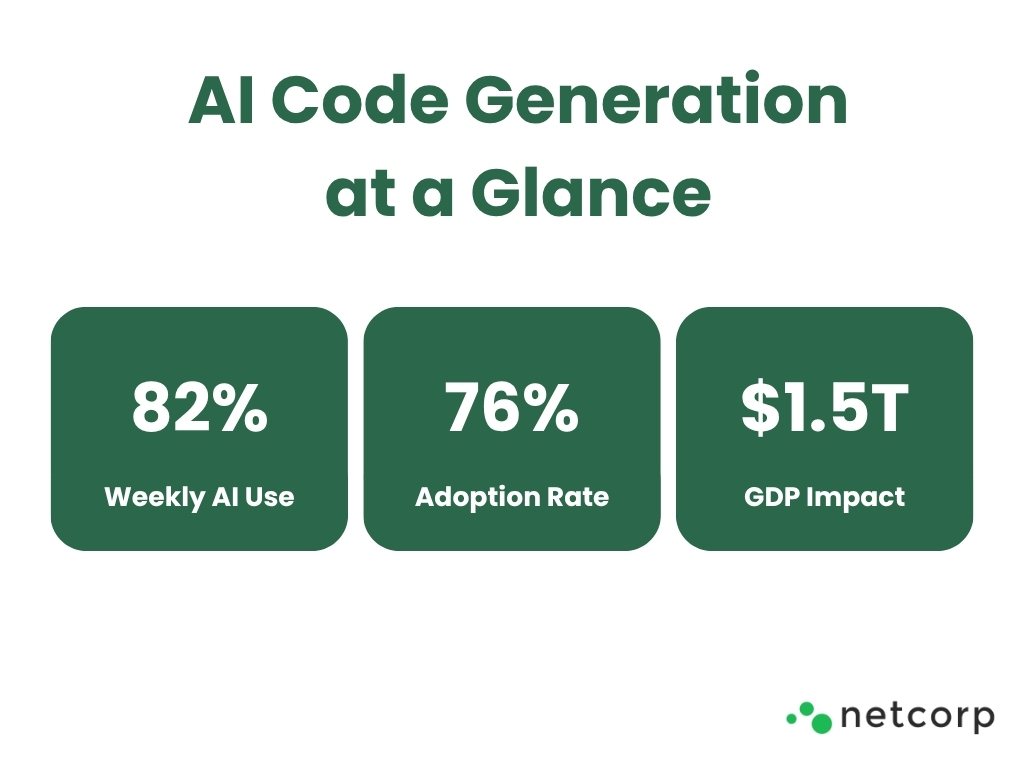

According to recent global estimates, 41% of all code is now AI-generated, with 76% of professional developers either using (62%) or planning to use (14%) AI coding tools. Moreover, in recent developer surveys:

1. 82% of devs say they use AI coding tools daily or weekly.

2. 59% run three or more AI tools in parallel.

3. 65% report that AI touches at least a quarter of their codebase.

4. 78% say AI improves productivity, and 57% say it makes their job more enjoyable.

Even tech giants like Google are leaning in. As of early 2025, 25% of Google’s code is AI-assisted, but according to Google CEO Sundar Pichai on the Lex Fridman Podcast, the real focus is on engineering velocity:

“The most important metric... is how much has our engineering velocity increased as a company due to AI... Our estimates are that the number is now at 10%. We plan to hire more engineers next year because the opportunity space of what we can do is expanding too.”

So yes, AI is generating a lot of code, but that doesn’t mean developers are being pushed out. This is just a shift in how teams work.

As you saw, AI tools are already part of daily workflows for developers across the world. Let’s break down where AI is helping most, and why the humans behind the keyboard still matter more than ever.

According to a survey by Zero to Mastery, a massive 84.4% of programmers have at least some experience with AI code-generation tools. The highest adoption rates are among:

Age also matters: older developers are slower to bring new AI tools into their processes, and developers aged 18 to 34 are twice as likely to use AI in their daily work as older age groups.

Between 20% and 40% of workers are already using AI on the job, and the numbers are even higher in tech-forward fields. Software development, along with marketing and customer service, stands out as one of the fastest adopters, both in usage and investment.

What’s more, 51% of active AI users are part of small teams with 10 or fewer developers, showing that you don’t need a huge budget or headcount to get value from AI. That said, larger companies aren’t far behind: by early 2025, one in four enterprises with 100+ engineers had already moved beyond testing and were actively using AI in their workflows (Source). Team size clearly isn’t a barrier; what matters is how teams choose to embrace the tools.

Successful AI adopters share a few traits (Source):

Data also shows a split in company culture (Source):

Meanwhile, 97%+ of developers say they’re already using AI tools on their own, often ahead of company policy.

GitHub’s latest data across four countries reveals where AI tools are most supported and trusted:

Countries like the U.S. and India, where tech adoption moves fast and English is widely used, tend to be more confident in using AI for coding. Developers there are also seeing bigger improvements in code quality. Meanwhile, Germany stands out for its more careful, measured approach to bringing AI into the workflow.

While GitHub’s data shows how developers are using AI to write code, broader adoption patterns depend on more than tools: they're shaped by public trust, regulatory readiness, and cultural expectations.

The table below expands the lens beyond code generation to explore how different countries approach AI adoption at a societal level, including how much people trust the technology, how prepared their governments are to regulate it, and how cultural values influence acceptance:

Sources: KPMG Global Study 2025; World Economic Forum Study 2025

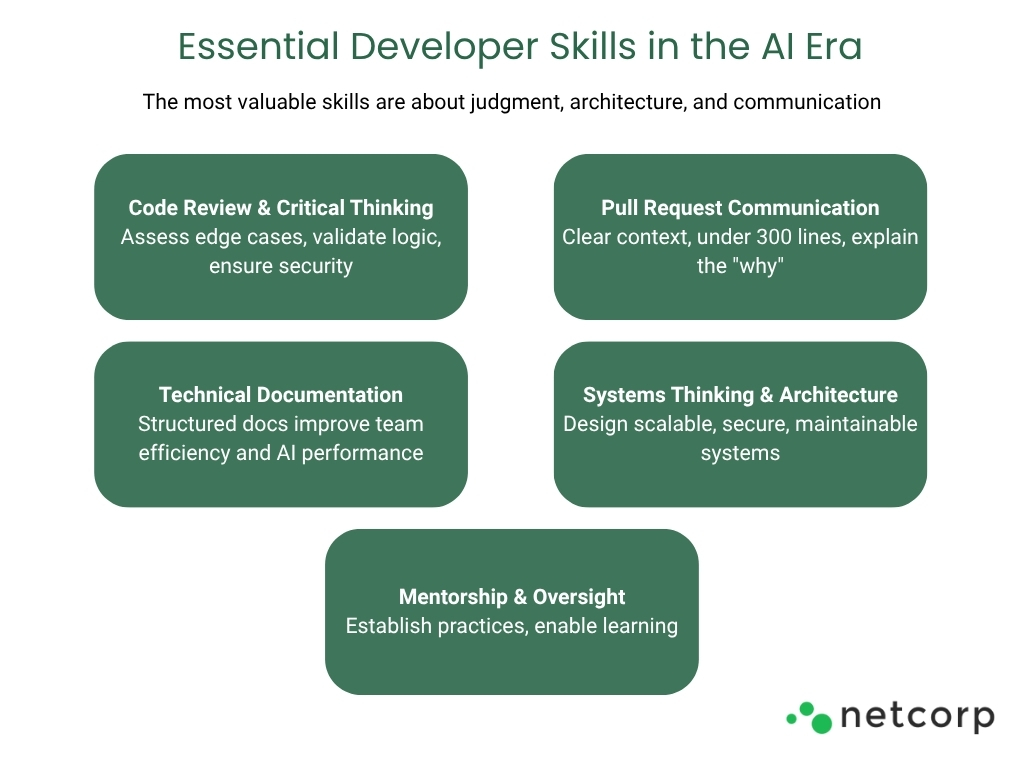

The role of developers is shifting from just writing code to guiding, validating, and optimizing what AI produces. The most valuable skills are no longer just about speed; they’re about judgment, architecture, and communication:

AI boosts speed, but only developers can ensure software is stable, secure, and scalable. Skills like code review, documentation, architectural planning, and team collaboration are what set high-performing developers apart in the AI era.

According to the World Economic Forum’s Future of Jobs Report 2025, 39% of job skills will transform by 2030, and technical talent will need a stronger mix of AI fluency, systems thinking, and soft skills:

So, the most valuable training for developers between now and 2030 includes AI, cloud, cybersecurity, and data analytics, combined with soft skills like analytical thinking, adaptability, and communication.

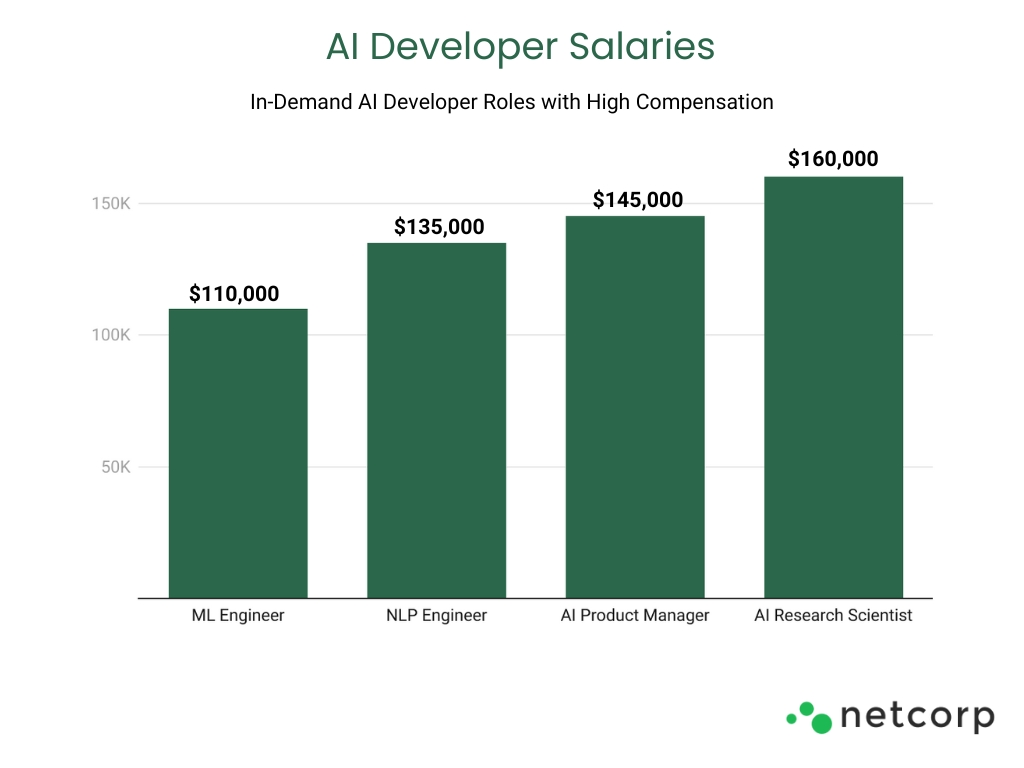

According to recent studies, in 2025, developers with AI expertise are seeing a clear salary advantage:

As experience grows, so does the gap:

The higher pay reflects the specialized skills required and the direct business impact of AI-driven work, from automation to product innovation.

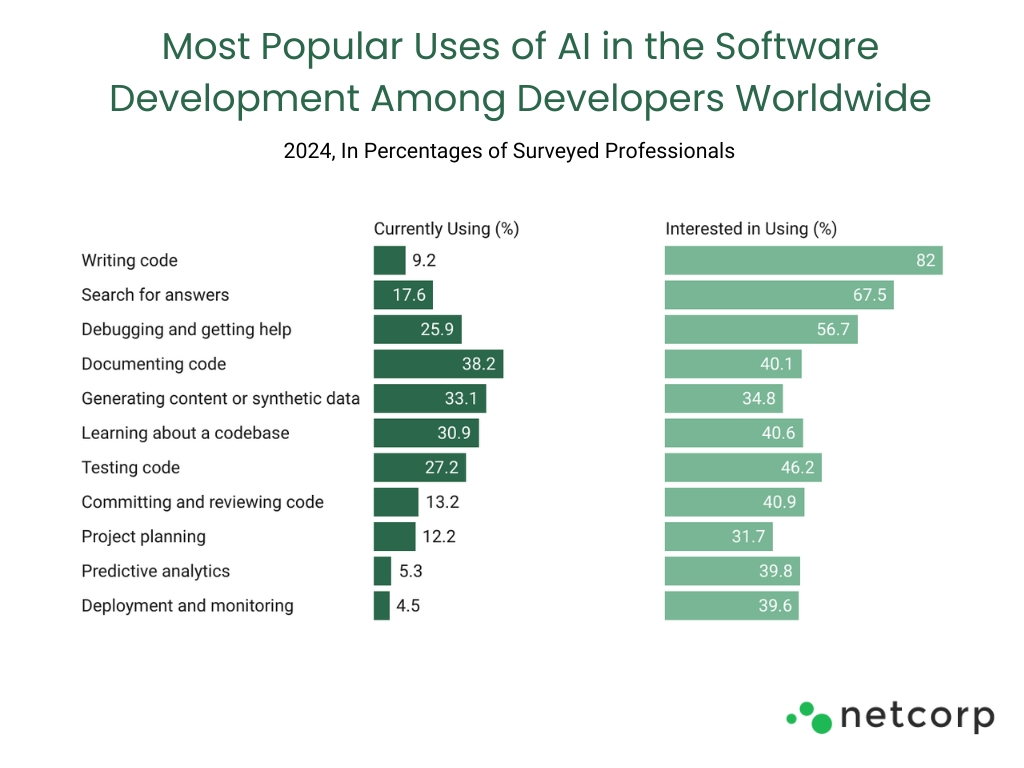

AI is already reshaping how developers work, and recent data shows exactly where it’s making the biggest impact. The top two use cases for AI in software development are:

1. Code generation and writing: 82% of developers now rely on AI tools to help write code.

2. Search and problem-solving: 68% of developers turn to AI when they’re stuck, whether they need a quick answer, a code snippet, or help understanding what’s going wrong.

In other words, developers aren’t just using AI to write new code. They’re also leaning on it like a helpful teammate when they hit roadblocks or need a second brain.

And the impact goes far beyond speed. AI is expected to boost overall employee productivity by up to 40%, and 60% of business owners believe it will help their teams get more done. Developers agree: in a global survey of over 36,000 developers, improving productivity was the #1 reason for using AI tools.


But it’s not just about output. According to McKinsey, developers who use AI tools are twice as likely to report feeling happier, more fulfilled, and regularly entering a “flow” state. It’s a sign that smart AI adoption is also good for team morale.

The promise of AI-generated code is speed, but what about quality? One way to measure it is to look at what developers actually keep. According to Q1 2025 usage data, GitHub Copilot has a 46% code completion rate, but developers accept only around 30% of its suggestions. In other words, roughly three in ten AI suggestions are good enough to use, and the rest get tossed.

There's more to code quality than just acceptance rates. According to GitClear’s 2024 report, which analyzed over 153 million lines of code, AI tools may be quietly changing how we write and maintain software. Their research uncovered a few major trends:

1. Code duplication is spiking: AI-assisted coding is linked to 4x more code cloning than before.

2. Copy/paste is now more common than code reuse: For the first time in history, developers are pasting code more often than they're refactoring or reusing it.

3. Short-term churn is up, DRY is down: There's a noticeable increase in short-lived code and a decline in "moved" lines, suggesting modular, maintainable code is taking a backseat.

GitClear sums it up well: “AI-generated code resembles an itinerant contributor, prone to violate the DRY-ness of the repos visited.”

We can conclude that AI tools can absolutely help you move faster, but without guardrails, that speed may come at the cost of clean architecture, maintainability, and long-term scalability.
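One practical guardrail is to flag copy/pasted blocks in your own repository. The sketch below is a simplified exact-clone detector, not GitClear's methodology: it assumes clones are identical after stripping whitespace, ignores blank lines, and uses a hypothetical `find_duplicate_blocks` helper with a 4-line window. Renamed variables or reordered statements will slip past it.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple


def find_duplicate_blocks(
    files: Dict[str, str], window: int = 4
) -> Dict[str, List[Tuple[str, int]]]:
    """Return {block_hash: [(path, start_line), ...]} for every `window`-line
    block that appears more than once across the given files.

    Assumption: exact clones only. Lines are compared after stripping
    leading/trailing whitespace, and blank lines are skipped.
    """
    occurrences: Dict[str, List[Tuple[str, int]]] = defaultdict(list)
    for path, source in files.items():
        # Keep each normalized line together with its original 1-based line number.
        lines = [
            (lineno, line.strip())
            for lineno, line in enumerate(source.splitlines(), start=1)
            if line.strip()
        ]
        # Slide a fixed-size window over the non-blank lines and hash each block.
        for i in range(len(lines) - window + 1):
            block = "\n".join(text for _, text in lines[i : i + window])
            digest = hashlib.sha256(block.encode("utf-8")).hexdigest()
            occurrences[digest].append((path, lines[i][0]))
    # A block counts as duplicated if it shows up in more than one place.
    return {h: locs for h, locs in occurrences.items() if len(locs) > 1}


# Usage: the same helper pasted into two files.
body = "def total(items):\n    s = 0\n    for x in items:\n        s += x\n    return s\n"
dupes = find_duplicate_blocks({"a.py": body, "b.py": "# copied helper\n" + body})
for locations in dupes.values():
    print(locations)
```

Because windows overlap, one long clone is reported as several overlapping hits; a real tool would merge them into a single range.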

In just the past year, Python has surged to the top of global developer usage, largely driven by the growth of AI and machine learning. Meanwhile, JavaScript remains the most-pushed language on GitHub, with a 15% spike in packages on npm. Here’s how AI performs across the most common programming languages, and where it shines vs. where it still struggles.

AI is great at handling repetitive or rules-based coding tasks, and 41% of business owners now expect AI to help fix coding errors effectively.

Let’s break it down by language (Source):

What AI can do:

AI in Action: Trained on massive SQL datasets, AI understands schema structures and can generate queries based on your prompt, even for advanced reporting.
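For illustration, here is the kind of reporting query an assistant might draft from a prompt such as "total revenue per customer, highest first, only repeat customers." The schema, data, and prompt are hypothetical, and the query runs against an in-memory SQLite database through Python's standard `sqlite3` module. Note the `HAVING` clause: it quietly drops single-order customers, which is exactly the kind of intent detail a human reviewer should confirm.

```python
import sqlite3


def revenue_report(conn: sqlite3.Connection) -> list:
    # Hypothetical AI-drafted query: revenue per customer with more than one order.
    query = """
        SELECT c.name, SUM(o.amount) AS revenue
        FROM customers AS c
        JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.id, c.name
        HAVING COUNT(o.id) > 1
        ORDER BY revenue DESC
    """
    return conn.execute(query).fetchall()


# Hypothetical schema and sample data for the demo.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        amount REAL NOT NULL
    );
    INSERT INTO customers (id, name) VALUES (1, 'Acme'), (2, 'Globex'), (3, 'Initech');
    INSERT INTO orders (customer_id, amount)
        VALUES (1, 200.0), (1, 100.0), (2, 70.0), (2, 50.0), (3, 500.0);
""")
rows = revenue_report(conn)
print(rows)
```

Initech has the single largest order but is excluded by `HAVING COUNT(o.id) > 1`; whether that matches what the business actually wanted is a human call.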

What AI can do:

AI in Action: With exposure to millions of websites, AI can quickly generate user-friendly interfaces that look good and work well across devices.

What AI can do:

AI in Action: AI helps remove the guesswork from setup files, reducing human error and saving time when working with complex environments like Kubernetes.
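Generated config still deserves a quick sanity check. The sketch below is a hypothetical pre-flight helper, `check_deployment`, that assumes the manifest has already been parsed into a Python dict (for example with a YAML library). It checks a few things a Kubernetes `apps/v1` Deployment needs, such as the selector matching the pod template labels and each container having a name and image. It is not a substitute for schema validation or `kubectl apply --dry-run=server`.

```python
import copy
from typing import Any, Dict, List


def check_deployment(manifest: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems (empty list means no issues found)."""
    problems: List[str] = []
    if manifest.get("apiVersion") != "apps/v1" or manifest.get("kind") != "Deployment":
        problems.append("expected apiVersion apps/v1 and kind Deployment")
    if not manifest.get("metadata", {}).get("name"):
        problems.append("metadata.name is missing")

    spec = manifest.get("spec", {})
    selector = spec.get("selector", {}).get("matchLabels", {})
    template = spec.get("template", {})
    template_labels = template.get("metadata", {}).get("labels", {})

    # Every selector label must also appear on the pod template.
    if not selector:
        problems.append("spec.selector.matchLabels is empty")
    for key, value in selector.items():
        if template_labels.get(key) != value:
            problems.append(f"selector label {key}={value} not found in pod template labels")

    # Each container needs at least a name and an image.
    containers = template.get("spec", {}).get("containers", [])
    if not containers:
        problems.append("no containers defined")
    for i, container in enumerate(containers):
        for field in ("name", "image"):
            if not container.get(field):
                problems.append(f"containers[{i}] is missing '{field}'")
    return problems


good = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "nginx:1.27"}]},
        },
    },
}

# A typical slip: template labels drift from the selector, and the image is dropped.
bad = copy.deepcopy(good)
bad["spec"]["template"]["metadata"]["labels"] = {"app": "frontend"}
del bad["spec"]["template"]["spec"]["containers"][0]["image"]

problems = check_deployment(bad)
print(problems)
```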

What AI can do:

AI in Action: Python’s massive library ecosystem makes it a favorite for AI-generated code, especially for scripting, automation, and ML prototyping.
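As an example of the scripting chores assistants handle well, here is a small automation snippet that counts log lines per severity level. The log format (`date time LEVEL message`) and the `count_levels` helper are assumptions for illustration, and the script uses only the standard library.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical log format: "2025-04-01 12:00:00 LEVEL message"
LOG_LINE = re.compile(r"^\S+ \S+ (?P<level>DEBUG|INFO|WARNING|ERROR|CRITICAL)\b")


def count_levels(lines: Iterable[str]) -> Counter:
    """Count log lines per severity level, skipping lines that don't match the format."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:
            counts[match.group("level")] += 1
    return counts


sample = [
    "2025-04-01 12:00:00 INFO service started",
    "2025-04-01 12:00:05 WARNING slow response",
    "2025-04-01 12:00:09 ERROR upstream timeout",
    "2025-04-01 12:00:10 INFO retrying",
    "stack trace continuation line",
]
counts = count_levels(sample)
print(counts)
```

Boilerplate like this is where AI saves real time; deciding which log format your systems actually emit is still up to you.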

What AI can do:

AI in Action: Whether you're working on a simple website or a full-stack app, AI can take care of boilerplate code and help move projects forward faster.

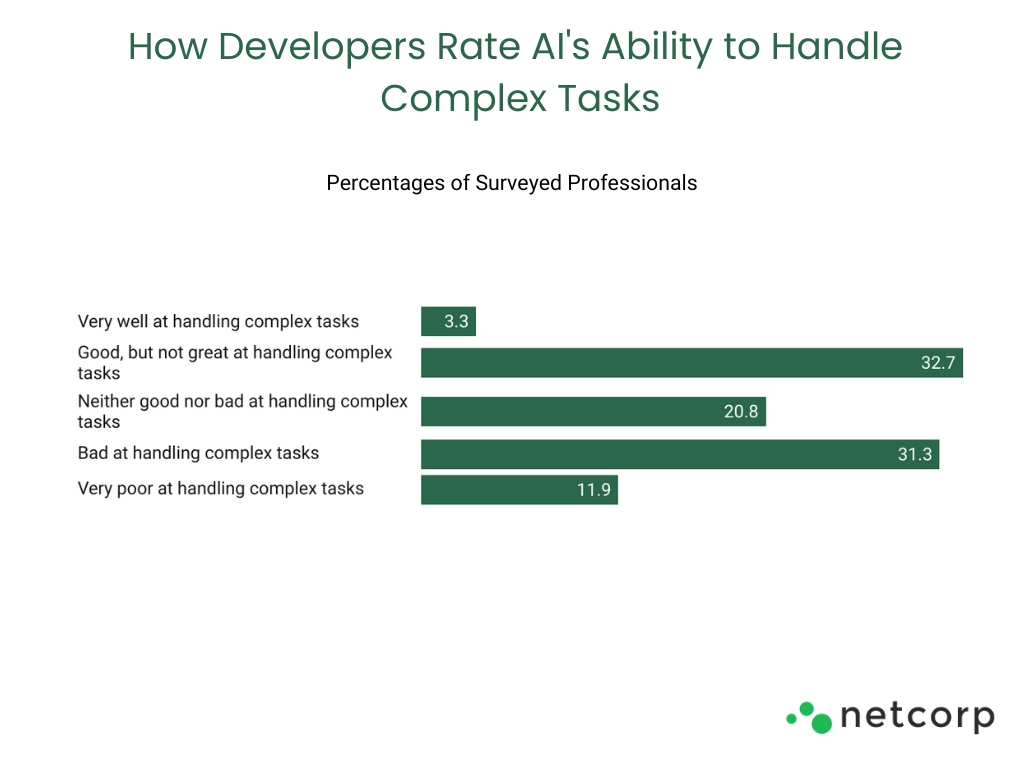

Even the smartest tools have limits, and AI still falls short when it comes to complex, strategic development work (Source):

Robust error handling and edge case coverage

AI is excellent at coding’s version of “paperwork,” the repeatable part of the job. But your real competitive edge still comes from the high-stakes, nuanced code AI can’t write (yet).


The AI code generation market is booming. It was valued at $4.91 billion in 2024 and is projected to hit $30.1 billion by 2032, growing at a rapid 27.1% CAGR (Source). Several powerful tools are behind this growth, and they shape how developers work:

All these tools differ in how they integrate, what they understand, and who they’re built for (Source).

There are a few tasks that all those tools do well:

These tools shine when you need to move fast or automate the tedious parts of development. They still can’t replace work where human thinking is essential:

As the most widely adopted AI coding tool, GitHub Copilot is now a daily companion for many developers:

But what does that look like in practice?

One detailed user review describes Copilot as “helpful but not great.” The tool shines in small, repetitive tasks, quickly generating clean methods and adapting to a developer’s coding style. Its “ghost text” suggestions often feel impressively intuitive, saving time and mental energy.

However, issues arise with more complex or large-scale work. Reviewers report syntax errors in C#, hallucinated methods, and trouble working across multiple files. It can also behave unpredictably, sometimes even deleting live code when adding comments.

The takeaway: Copilot is a sidekick, not a co-founder!

It’s great for quick functions, but take it out of the sandbox and it struggles. That’s why developers are still in the driver’s seat, and Copilot just rides shotgun.

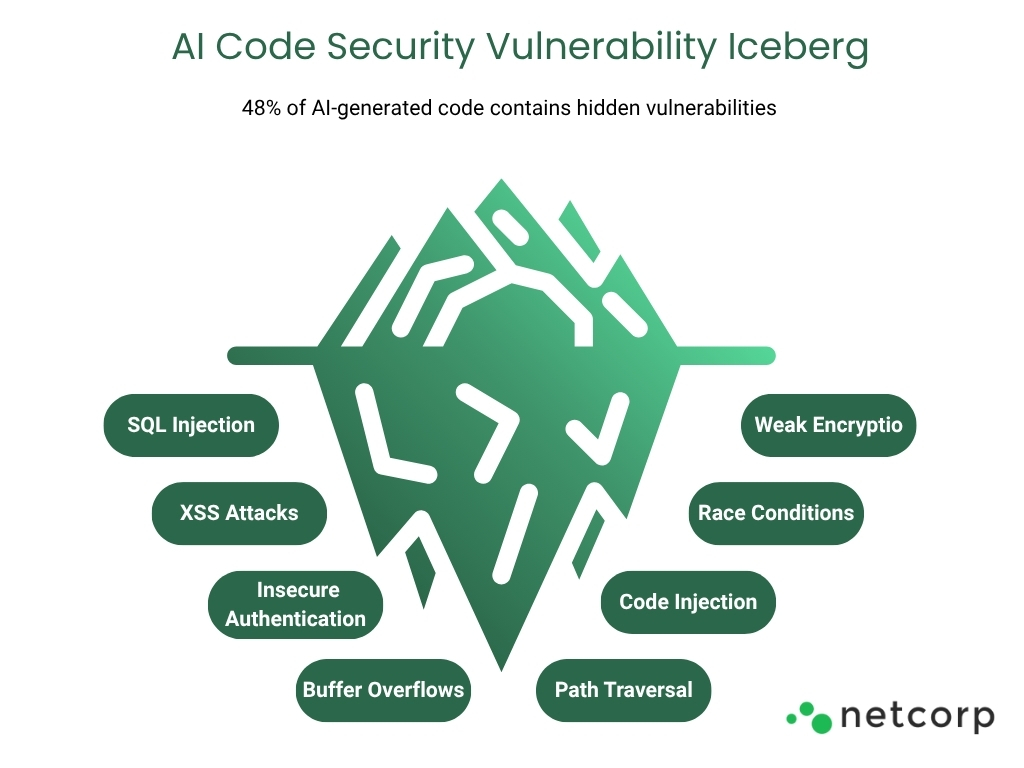

AI-generated code delivers speed, but it comes with trade-offs, most notably security risks you have to manage. Recent studies show that at least 48% of AI-generated code contains security vulnerabilities. Earlier research on GitHub Copilot found similar issues, with 40% of generated programs flagged for insecure code.
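SQL injection (CWE-89) is one of the classic weakness classes that security reviews look for in generated code. The sketch below, using Python's built-in `sqlite3` and a hypothetical `users` table, contrasts a string-interpolated query of the kind an assistant may suggest with a parameterized one. It illustrates the vulnerability class only; it is not taken from the studies cited above.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # Vulnerable: user input is pasted directly into the SQL text.
    return conn.execute(f"SELECT id, name FROM users WHERE name = '{username}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Parameterized: the driver passes the value separately from the SQL text.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # the injected OR clause matches every row
print(find_user_safe(conn, payload))    # treated as a literal name, so no match
```

Both functions look plausible at a glance, which is why generated data-access code should go through review or a static analyzer before it ships.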

It’s even riskier at the API level: 57% of AI-generated APIs are publicly accessible, and 89% rely on insecure authentication methods. That’s a serious liability in production environments.
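"Insecure authentication" covers many patterns. One common one is an API key hard-coded in source and compared with `==`. The minimal sketch below shows a safer alternative under stated assumptions: the expected key comes from a secret store or environment variable, only its SHA-256 digest is kept in memory, and `hmac.compare_digest` performs a constant-time comparison. The helper names are hypothetical, and hashing this way is only appropriate for long, random API keys, not user passwords.

```python
import hashlib
import hmac
import secrets
from typing import Optional

# Insecure pattern: secret embedded in source and compared with ==,
# which can exit early and leak timing information.
HARDCODED_KEY = "sk-live-do-not-do-this"


def is_authorized_insecure(header_value: Optional[str]) -> bool:
    return header_value == HARDCODED_KEY


def hash_key(key: str) -> bytes:
    """SHA-256 digest of an API key (suitable for long random keys, not passwords)."""
    return hashlib.sha256(key.encode("utf-8")).digest()


def is_authorized(header_value: Optional[str], expected_hash: bytes) -> bool:
    """Reject missing keys, then compare digests in constant time."""
    if not header_value:
        return False
    return hmac.compare_digest(hash_key(header_value), expected_hash)


# In production the key would come from a secret manager or an environment
# variable; here a random one is generated for demonstration.
issued_key = secrets.token_urlsafe(32)
stored_hash = hash_key(issued_key)
print(is_authorized(issued_key, stored_hash))
```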

AI might help you ship faster, but you could be paying for it later.

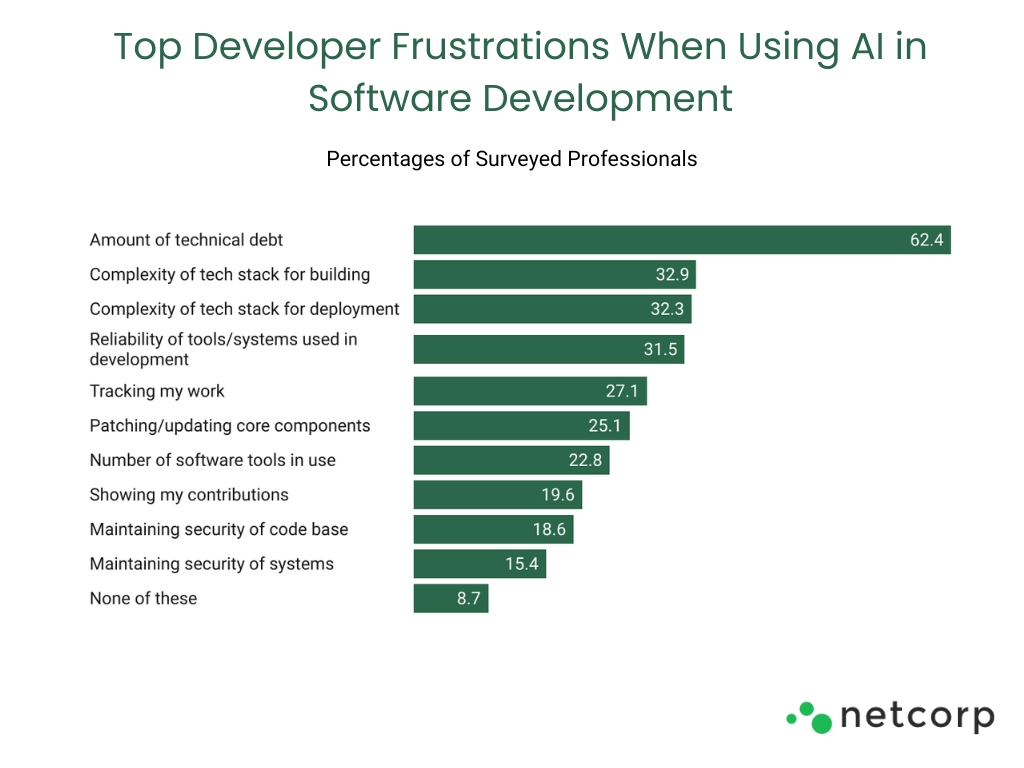

1. Delivery Stability Drops: According to Google’s 2024 DORA report, increased AI use speeds up code reviews and documentation, but comes with a 7.2% decrease in delivery stability.

2. Rising Technical Debt: Developers already spend a big chunk of their time cleaning up old code, and AI doesn’t always make that easier. In fact, if no one’s keeping an eye on things, it can quietly pile on more mess. Over time, that quick win today can turn into a maintenance headache tomorrow.

When your AI system controls a power grid or manages patient medications, "move fast and break things" isn't an option. Critical infrastructure operators are discovering that AI adoption comes with stakes that extend far beyond quarterly profits.

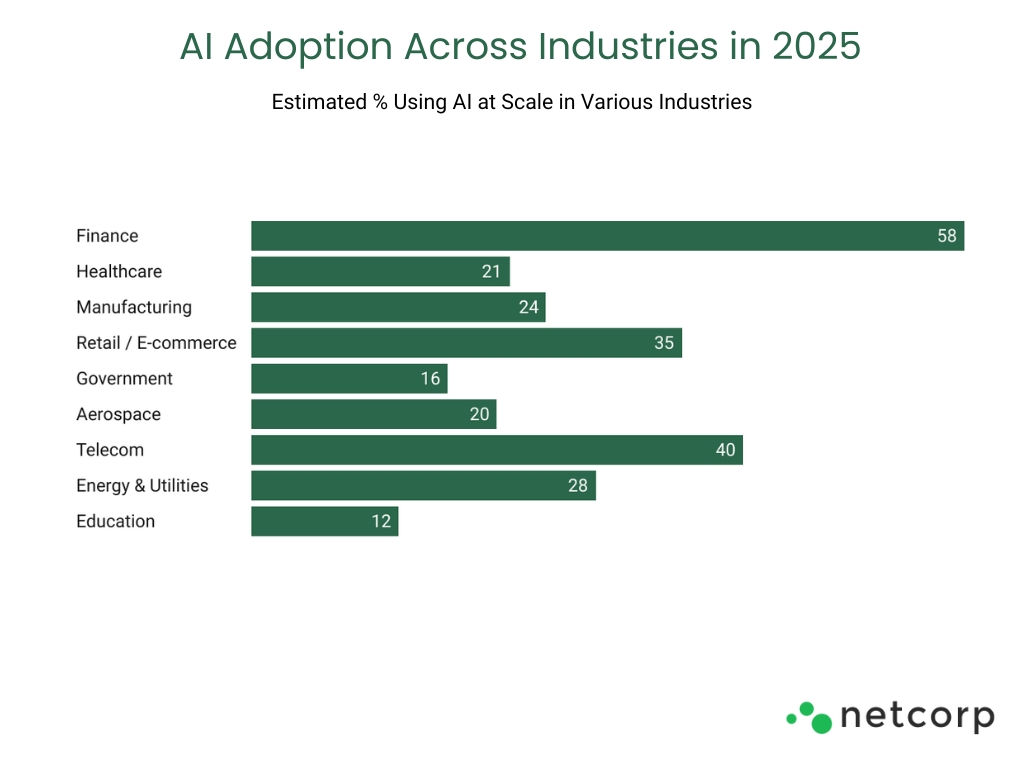

The numbers tell an interesting story. While healthcare and manufacturing AI adoption is still growing, financial services are charging ahead: 58% of finance functions now use AI, up 21 percentage points from 2023. Besides finance, other industries have also started adopting AI in their daily use:

Sources: McKinsey Global Survey on AI (2025); Deloitte 2025 AI in Financial Services Report; IBM Global AI Adoption Index (2025)

In 2025, the Trump administration scrapped Biden’s AI safety measures and hit the gas on deployment. The new approach is all about speed: agencies are now expected to bring in AI fast, even in high-risk areas, with less focus on oversight and more on keeping up. Each agency now has to appoint a Chief AI Officer, but the bigger message is clear: move quickly, and don’t let regulations slow you down.

At the same time, over $90 billion in private investment was mobilized to build AI data centers, often powered by fossil fuels and located on federal land. While innovation is booming, concerns about AI misuse and physical security have taken a back seat. The government’s message is clear: accelerate now, regulate later.

Security teams are dealing with threats coming from two directions. Your AI systems can get hacked or fed bad data. Meanwhile, hackers are using AI to get better at breaking into everything else. Throw quantum computing into this mess and you've got a real problem. Banks, hospitals, and power companies are sitting ducks: quantum-powered attacks could shut down payment systems or steal patient records faster than anyone can react.

The rules are changing faster than legal teams can read them. Companies are stuck making compliance decisions without a roadmap. It's not just extra paperwork; it creates real problems when you're trying to innovate while keeping regulators happy.

Running AI in critical infrastructure isn't like deploying a new CRM system. When your stuff breaks, real people get hurt. The companies that get this right will have teams who understand both the technology and the industry inside and out. They'll know how to think through worst-case scenarios where AI failure means hospitals go dark or financial systems crash.

There's no room for "we'll figure it out as we go" when millions of people depend on your systems working. Skilled developers are the ones who can prevent that, and we’ll come back to this later.

GitHub's research shows AI developer productivity could boost global GDP by over $1.5 trillion, demonstrating the massive economic potential of AI-powered development tools. Microsoft’s Q1 2025 market study reveals AI investments are now returning an average of 3.5X, with 5% of companies reporting returns as high as 8X. But let’s go deeper into what it really means in practice:

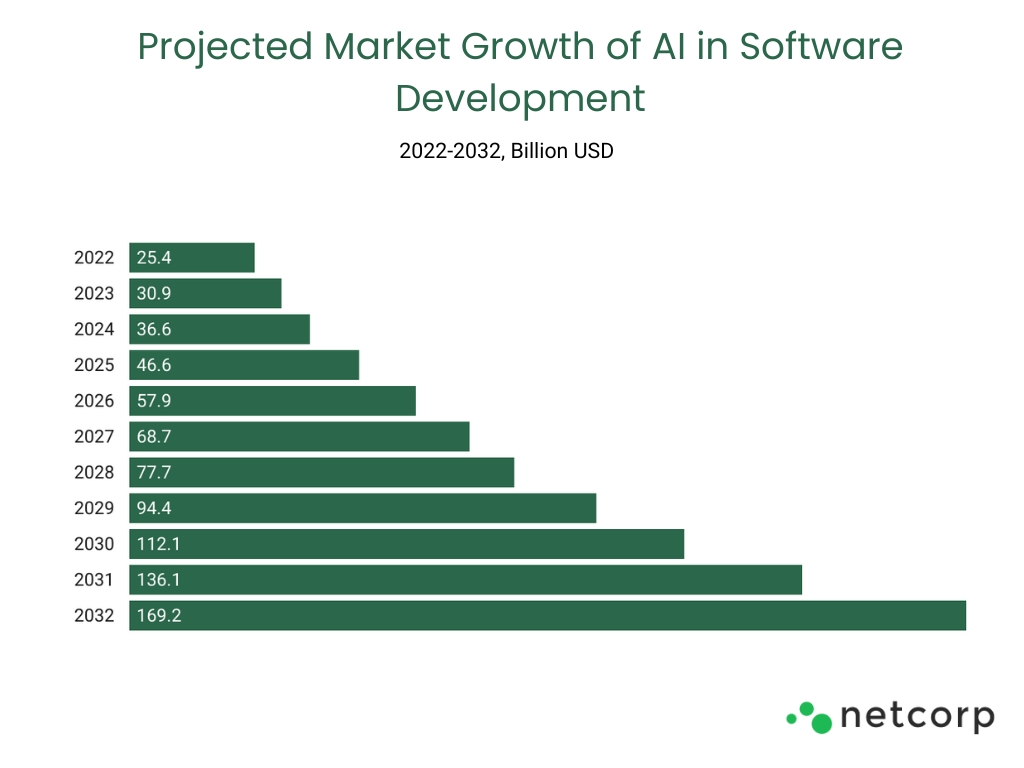

The industry as a whole is expected to reach USD 169.2 billion by 2032:

Less than half (47%) of IT leaders said their AI projects were profitable in 2024, with one-third breaking even and 14% recording losses. Despite these mixed results, nearly two-thirds (62%) of all respondents indicate they are increasing their AI investments in 2025.

Even companies that haven’t hit ROI yet are doubling down. Why? Because they know AI isn’t a silver bullet; it’s a smart bet. The teams investing wisely now will win later.

A recent MIT study signals where AI code generation is going, and it closely aligns with what the market is demanding.

The focus is shifting from just working code to smart code: code that’s not only syntactically correct, but also does exactly what the user intended. MIT’s approach helps AI models write more accurate, meaningful code by running multiple versions at once and choosing the best one, kind of like a smart brainstorming session.
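MIT's actual technique is more sophisticated: the work is described as steering generation with sequential Monte Carlo, pruning weak partial outputs as they are produced. The much simpler best-of-N sketch below only captures the underlying intuition of generating several candidates, checking each against what the user meant, and keeping the best. The hard-coded queries stand in for model samples, and `score_sql` and `pick_best` are hypothetical helpers.

```python
import sqlite3
from typing import Callable, List, Optional, Sequence, Tuple


def score_sql(candidate: str, expected: List[Tuple]) -> int:
    """Hypothetical scorer: 0 = fails to run, 1 = runs, 2 = runs and matches the example."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(
            "CREATE TABLE users (name TEXT, age INTEGER);"
            "INSERT INTO users VALUES ('Linus', 54), ('Ada', 36);"
        )
        rows = conn.execute(candidate).fetchall()
    except sqlite3.Error:
        return 0
    finally:
        conn.close()
    return 2 if rows == expected else 1


def pick_best(candidates: Sequence[str], score: Callable[[str], int]) -> Optional[str]:
    """Return the highest-scoring candidate with a score above 0 (first one wins ties)."""
    best, best_score = None, 0
    for candidate in candidates:
        s = score(candidate)
        if s > best_score:
            best, best_score = candidate, s
    return best


# Stand-ins for several model samples for "list user names alphabetically".
candidates = [
    "SELEC name FROM users",                 # syntax error
    "SELECT age FROM users",                 # runs, but not what was asked
    "SELECT name FROM users ORDER BY name",  # runs and matches the intent
]
expected = [("Ada",), ("Linus",)]
best = pick_best(candidates, lambda c: score_sql(c, expected))
print(best)
```

Checking "does it run" alone would have accepted the second query; checking against an example of the intended result is what separates working code from code that does what the user meant.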

Even more interesting, smaller open-source models using this method outperformed some of the biggest commercial tools. That’s a strong sign that smarter is beating bigger in the AI race.

This smarter AI doesn’t just help developers, it opens the door for non-tech folks too. We’re getting closer to a world where anyone can write a SQL query or automation script using just plain English. So, AI is starting to think more like us: not just spitting out code, but understanding what we’re really trying to build.

With headlines about developers struggling to find jobs, even in Silicon Valley, it’s no surprise that panic is spreading. But let’s take a breath and look at the full picture.

That tells us something important: the code quality and long-term impact are still very much in human hands.

So far, AI tools can take over junior-level developer tasks:

However, accuracy alone isn’t enough, even at this level. Even when AI gets it “right,” developers are still cautious: those who rarely see hallucinations are 2.5x more likely to trust the output, but even then, 75% won’t merge the code without manual review. Why? Because what matters isn’t just correctness; it’s whether the code:

Based on hundreds of comments in discussion forums like Reddit and Quora, the overall sentiment among professional developers is nuanced, not extreme. One developer on Reddit put it really well:

“It’s like having a good intern/early in career dev that happens to churn out something decent right after you ask. It’s still up to you to review it, etc.”

Beyond this idea, here's the distilled consensus:

1. AI enhances productivity, but is not a replacement: Experienced developers overwhelmingly see AI tools as helpful for boilerplate, testing, and mundane tasks, but not capable of replacing deep problem-solving, design, or architecture.

2. Effective use of AI gives you an edge, but it doesn’t make weak developers stronger by default.

3. Junior developers are at risk: Many fear that newer engineers who rely too heavily on AI aren’t building fundamental skills, which will make them less competent in the long term.

4. Executive overconfidence in AI is dangerous: A common theme is frustration with managers who treat AI efficiency as justification for layoffs, even when it leads to technical debt or security issues.

5. The AI hype is real, and overblown: Developers consistently say marketing oversells AI's capabilities. It can save time, but it can’t think or reason like a human.

6. AI is best used by experienced engineers as a sort of "robotic intern": useful, but in need of constant supervision.

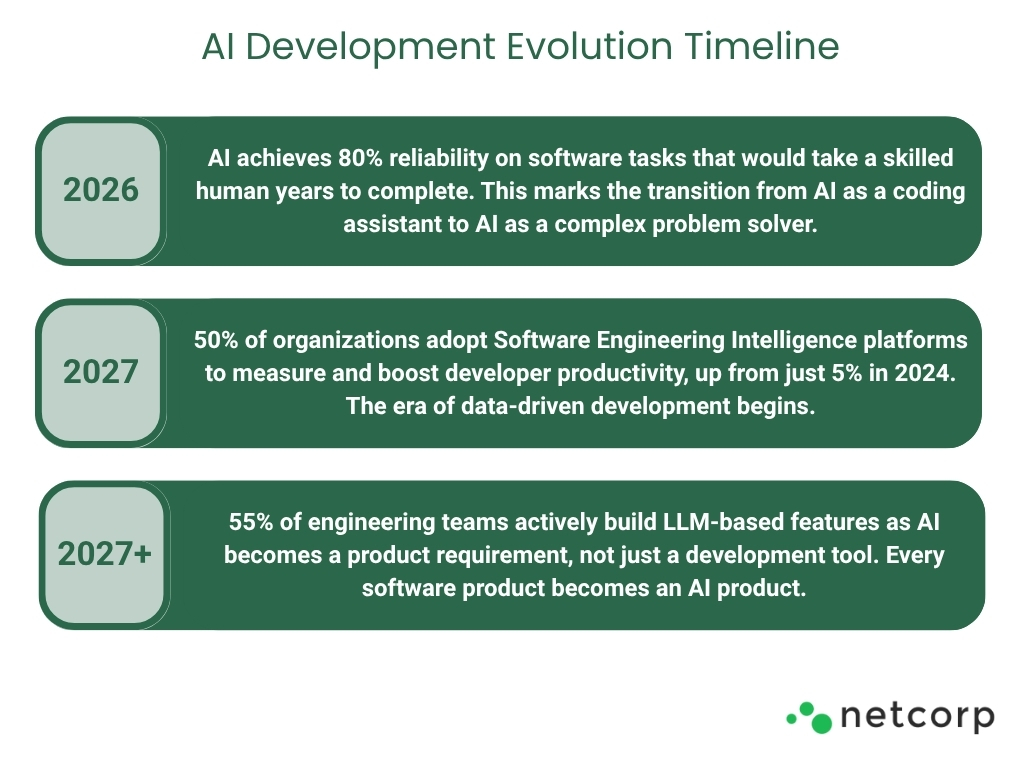

By 2026, more than 80% of enterprises are expected to have used generative AI APIs or deployed generative AI-enabled applications. Unlike today's focus on boilerplate, AI will start handling business logic and multi-component integration.

Junior roles will pivot to become "AI Engineering Coordinators," professionals who (Source):

Gartner predicts 80% of the engineering workforce will need upskilling through 2027 specifically for AI collaboration skills.

Here's an expected timeline for complex task automation:

The biggest shift won't be AI replacing developers. It'll be Software Engineering Intelligence (SEI) platforms becoming standard. These platforms provide data-driven visibility into engineering teams' use of time and resources, automatically optimizing human-AI workflows.

This means by 2026, most development work will happen within AI-managed environments that continuously optimize task allocation between humans and machines.

AI has totally changed how we write code. However, we can’t yet say that it’s replacing developers. AI can help, but it’s not building solid, secure software on its own.

That’s why having the right team still matters. At Netcorp, we help companies use AI tools wisely: pairing speed with solid engineering so nothing breaks down later. If you want to build smarter, safer, and future-ready software, we’re here to help.

If you’re working closely with the code, you can usually get a gut feeling, some parts just don’t read like something a person would write. The names might be weirdly vague, or the logic feels too copy-paste. There are tools that try to flag this stuff, but honestly, it’s more about noticing patterns that don’t fit than finding some clear “AI wrote this” stamp.

Not exactly. According to Google CEO Sundar Pichai, around 25% of Google’s code is AI-assisted, meaning AI tools helped generate parts of it, but humans are still reviewing, editing, and integrating that code. The focus is on boosting engineering speed, not fully replacing developers.

Yes, according to Microsoft CEO Satya Nadella, AI now writes about 20–30% of the code in some of their projects. But that doesn’t mean a third of all Microsoft code is AI-made. He was referring mostly to new code in active projects, and the percentage can vary a lot depending on the programming language or type of work being done.

https://www.mdpi.com/1999-4893/17/2/62/

https://medium.com/@mark.pelf/github-copilot-gen-ai-is-helpful-but-not-great-march-2025-db655ca50c53/

https://news.mit.edu/2025/making-ai-generated-code-more-accurate-0418/

https://www.pwc.com/us/en/tech-effect/ai-analytics/ai-predictions.html

https://socket.dev/blog/python-overtakes-javascript-as-top-programming-language/

https://www.softwareone.com/en/now/cloud-skills-report